Data Science

Data Science Blog

-

2025 Spring End-of-Year Spotlight Alumni Event

The Spring End-of-Year Spotlight Alumni Event for the MTSU Data Science programs is a celebration of student achievement, alumni success, and the strength of our MTSU data science community. In April, current students, alumni, faculty, and staff gathered to network, socialize and recognize this year’s achievements. The highlight of this event is the focus on…

-

Using AI as a Data Science Researcher: Changes, Capabilities, and Ethics

In the last two years, we have seen unprecedented progress in the capabilities of large language models. In the last month, several models have emerged that show advanced reasoning capabilities.

-

Integrating Health Care Data with HL7: Leveraging Google Cloud and RESTful APIs for Enhanced Interoperability

Explore advanced data engineering techniques in healthcare data management using Google Cloud's BigQuery and the HL7 standard.

-

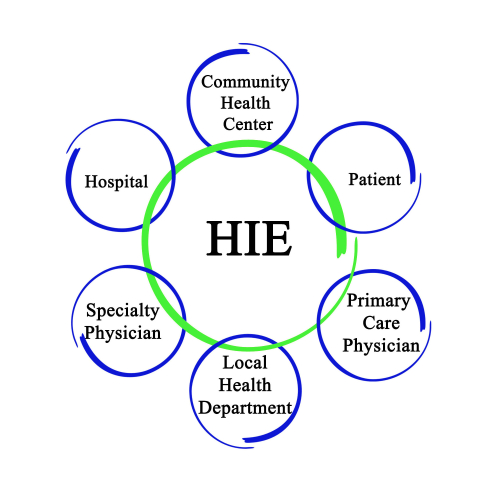

Introduction to Health Information Exchanges (HIE): Interoperability in Healthcare and Health Insurance IT

The seamless exchange of healthcare information, facilitated by Health Information Exchange (HIE), is pivotal for enhancing patient care, improving outcomes, and reducing healthcare costs.

-

Reading the Pseudo-code for Transformers

Transformers are the underlying architecture powering large language models such as GPT, BERT, and Llama.

-

Opportunities for Actuaries and Computational Mathematics Experts in the Healthcare Industry

Ramzi Abujamra, PhD, ASA, Sr. Clinical & Population Health Analyst at Highmark Health, will describe opportunities in the health insurance industry, drawing on his own career.

-

Using AI-Assisted Writing Productively

The "computational" capabilities of chatbots are growing exponentially, and the ways we interact with them are changing rapidly.

-

ChatGPT Vision vs Real World

I finally got access to the Vision feature in ChatGPT. Once it was enabled on my phone, I gave it a series of challenges to see how well it works. The results were impressive, and a bit disturbing. Check it out.

-

Computational and Data Science Seminar September 15, 2023 with Ph.D. students Momina Liaqat and Richard Hoehn

In this seminar we hear from two Computational and Data Science Ph.D. students, Momina Liaqat and Richard Hoehn.

-

Computational and Data Science Seminar September 22, 2023 with Dr. Vishwas Bedekar and Dr. Qiang Wu

This is a split seminar. Dr. Vishwas Bedekar talks about his research on energy harvesting systems at MTSU, and Dr. Qiang Wu talks about the master's degree in Data Science.

Contact Us

MTSU Data Science

MTSU Box 0499

1301 East Main Street

Murfreesboro, TN 37132

615-898-2122